NuwaUnity : Nuwa Robot SDK for Unity


Feature list:

- Motion
- Touch
- Motor
- Control Face
- LED
- TTS
- In-app local command
- Speech2Text
- Recognize
- Face_track
- Face_Recognize
- Movement
- Connection

Unity/Android version

Unity version: 2018.4.0 or later. Minimum Android version: 6.0.

Install

After decompressing the zip file, you will find NuwaUnity_Core.UnityPackage and NuwaUnity_Sample.UnityPackage.

Drag both packages into Unity3D and import all of their files.

If you get an error saying that the namespace "nuwa" could not be found, you have probably imported only NuwaUnity_Sample. Import NuwaUnity_Core.UnityPackage as well to solve the problem.

Scenes

All demo scenes are in Assets/NuwaUnity/Scene.

Drag all scenes from [Assets/NuwaUnity/Scene] into Scenes In Build (File > Build Settings), and set [NuwaUnity/Scene/Demo_Title] as the first scene.

Motion

Unity Scene : Demo_Motion_Play

A Nuwa motion file uses Nuwa's proprietary robot motion control format, which combines "MP4(Face)", "Motor control", "Timeline control", "LED control", and so on. When you play a motion, the robot performs a series of predefined actions. Note: some motions contain only body movements, without "MP4(Face)".

motionPlay(final String motion, final boolean auto_fadein)

- motion: motion name, ex: 666_xxxx

- auto_fadein: for a motion that includes a face, set this to "true"; the screen then shows a surface window for the face.

motionPlay(final String name, final boolean auto_fadein, final String path)

motionPrepare(final String name)

motionPlay( )

motionStop(final boolean auto_fadeout)

- auto_fadeout: if true, stop playing the motion and hide the overlay window as well

getMotionList( )

motionSeek(final float time)

motionPause( )

motionResume( )

motionCurrentPosition( )

motionTotalDuration( )

```csharp
// use the default NUWA motion asset path
Nuwa.motionPlay("001_J1_Good");

// give a specific motion asset path (internal use only)
Nuwa.motionPlay("001_J1_Good", false, "/sdcard/download/001/");

// NOTICE: you must call motionStop(true) if auto_fadein was true.
// You will get the onStopOfMotionPlay(String motion) callback.
Nuwa.motionStop();

// pause, resume
Nuwa.motionPause();
Nuwa.motionResume();
```
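The NOTICE above is easy to miss when a motion is started in one place and stopped in another. One way to enforce it is a small wrapper that remembers the auto_fadein flag and passes the matching auto_fadeout to motionStop. This is a minimal Java sketch: MotionApi and MotionSession are hypothetical names, not part of the SDK, and MotionApi only mirrors the motionPlay/motionStop signatures listed above.

```java
// Hypothetical wrapper, not part of the NuwaUnity SDK.
// MotionApi mirrors the documented motionPlay/motionStop signatures.
interface MotionApi {
    void motionPlay(String motion, boolean autoFadein);
    void motionStop(boolean autoFadeout);
}

class MotionSession {
    private final MotionApi api;
    private boolean playing;
    private boolean fadedIn;

    MotionSession(MotionApi api) {
        this.api = api;
    }

    void play(String motion, boolean autoFadein) {
        api.motionPlay(motion, autoFadein);
        playing = true;
        fadedIn = autoFadein;
    }

    // Stops the current motion. Passes auto_fadeout = true whenever the
    // motion was started with auto_fadein = true, as the NOTICE requires.
    void stop() {
        if (!playing) {
            return; // nothing to stop
        }
        api.motionStop(fadedIn);
        playing = false;
        fadedIn = false;
    }
}
```

Keeping the flag next to the play call means no caller has to remember which fade-in setting was used.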

TTS

Unity Scene : Demo_tts

The robot speaks a given string aloud.

- startTTS(final String tts)

- startTTS(final String tts, final String locale)

- pauseTTS()

- resumeTTS()

- stopTTS()

```csharp
Nuwa.startTTS("Nice to meet you");

// you can cancel speaking at any time
Nuwa.stopTTS();

// or speak in a specific language (see TTS Capability for market differences)
string TTS_sample = "Nice to meet you";
Nuwa.startTTS(TTS_sample, "en_us");

// receive the onTTSComplete(bool isError) callback of VoiceEventListener
Nuwa.onTTSComplete += OnTTSComplete;

void OnTTSComplete(bool isError) { }
```

TTS Capability (only supported on Kebbi Air)

- Taiwan Market: Locale.CHINESE / Locale.ENGLISH
- Chinese Market: Locale.CHINESE / Locale.ENGLISH
- Japan Market: Locale.JAPANESE / Locale.CHINESE / Locale.ENGLISH
- Worldwide Market: Locale.ENGLISH
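If an app ships to several markets, it can check the table above before requesting a language. A minimal Java sketch, assuming you know which market the robot belongs to; the Market enum and supports() are hypothetical helpers, not SDK API. It compares languages only, so a country-specific locale such as en_US still matches Locale.ENGLISH.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Hypothetical helper encoding the TTS Capability table (Kebbi Air only).
enum Market {
    TAIWAN(Locale.CHINESE, Locale.ENGLISH),
    CHINA(Locale.CHINESE, Locale.ENGLISH),
    JAPAN(Locale.JAPANESE, Locale.CHINESE, Locale.ENGLISH),
    WORLDWIDE(Locale.ENGLISH);

    private final List<Locale> locales;

    Market(Locale... locales) {
        this.locales = Collections.unmodifiableList(Arrays.asList(locales));
    }

    // Compares the language part only ("en_US" matches "en").
    boolean supports(Locale requested) {
        for (Locale locale : locales) {
            if (locale.getLanguage().equals(requested.getLanguage())) {
                return true;
            }
        }
        return false;
    }
}
```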

Touch

Unity Scene : Demo_Touch

The robot provides touch events, which you can subscribe to when you need them. Touch event callbacks are raised for the head, chest, right hand, left hand, left face, and right face.

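```csharp
private void Start()
{
    Nuwa.onTouchBegan += OnTouchBegin;
    Nuwa.onTouchEnd += OnTouchEnd;
    Nuwa.onTap += OnTap;
    Nuwa.onLongPress += OnLongPress;
}

private void OnDestroy()
{
    // unsubscribe so the handlers are not called after this object is destroyed
    Nuwa.onTouchBegan -= OnTouchBegin;
    Nuwa.onTouchEnd -= OnTouchEnd;
    Nuwa.onTap -= OnTap;
    Nuwa.onLongPress -= OnLongPress;
}

// type: head: 1, chest: 2, right hand: 3, left hand: 4, left face: 5, right face: 6
public void OnTouchBegin(Nuwa.TouchEventType type) { }
public void OnTouchEnd(Nuwa.TouchEventType type) { }
public void OnTap(Nuwa.TouchEventType type) { }
public void OnLongPress(Nuwa.TouchEventType type) { }
```

LED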

Unity Scene : Demo_LED

The robot has 4 LED parts (head, chest, right hand, left hand), and the API can control each of them. Each LED part has 2 modes: "Breath mode" and "Light on mode". Turn an LED on with the API before using it, and turn it off when it is no longer needed. By default, the LEDs are controlled by the system. If your app needs different behavior and wants to control the robot's LEDs itself, call disableSystemLED() once, and call enableSystemLED() when the app enters the onPause state.

NOTICE: Kebbi Air does not support Breath mode on the Face and Chest LEDs.

- enableLed(final int id, final int onOff)

- setLedColor(final int id, final int brightness, final int r, final int g, final int b)

- enableLedBreath(final int id, final int interval, final int ratio)

- enableSystemLED() // LEDs are controlled by the robot itself

- disableSystemLED() // LEDs are controlled by the app

```csharp
/*
id: 1 = Face LED
id: 2 = Chest LED
id: 3 = Left hand LED
id: 4 = Right hand LED
onOff: 0 or 1
brightness, Color-R, Color-G, Color-B: 0 ~ 255
interval: 0 ~ 15
ratio: 0 ~ 15
*/

// disable system LED control so the app can control the LEDs
Nuwa.disableSystemLED();

// overloads that take an LED position:
// Nuwa.enableLed(Nuwa.LEDPosition, bool);
// Nuwa.setLedColor(Nuwa.LEDPosition, Color); // set the LED color of the given part

// turn on LEDs
Nuwa.enableLed(1, 1);
Nuwa.enableLed(2, 1);
Nuwa.enableLed(3, 1);
Nuwa.enableLed(4, 1);

// set LED colors (id, brightness, R, G, B)
mRobot.setLedColor(1, 255, 255, 255, 255);
mRobot.setLedColor(2, 255, 255, 0, 0);
mRobot.setLedColor(3, 255, 166, 255, 5);
mRobot.setLedColor(4, 255, 66, 66, 66);

// switch to "Breath mode" (id, interval, ratio)
mRobot.enableLedBreath(1, 2, 9);

// turn off LEDs
mRobot.enableLed(1, 0);
mRobot.enableLed(2, 0);
mRobot.enableLed(3, 0);
mRobot.enableLed(4, 0);
```
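Out-of-range arguments are easier to catch before they reach the robot. The Java sketch below checks setLedColor and enableLedBreath arguments against the ranges in the comment above and rejects Breath mode on the Face and Chest LEDs for Kebbi Air, per the NOTICE. LedParams and the kebbiAir flag are hypothetical; how your app detects the robot model is left open.

```java
// Hypothetical argument checks based on the documented ranges; not part of the SDK.
class LedParams {
    static final int FACE = 1;
    static final int CHEST = 2;
    static final int LEFT_HAND = 3;
    static final int RIGHT_HAND = 4;

    // Arguments of setLedColor(id, brightness, r, g, b).
    static void checkColor(int id, int brightness, int r, int g, int b) {
        checkRange("id", id, FACE, RIGHT_HAND);
        checkRange("brightness", brightness, 0, 255);
        checkRange("r", r, 0, 255);
        checkRange("g", g, 0, 255);
        checkRange("b", b, 0, 255);
    }

    // Arguments of enableLedBreath(id, interval, ratio).
    static void checkBreath(int id, int interval, int ratio, boolean kebbiAir) {
        checkRange("id", id, FACE, RIGHT_HAND);
        checkRange("interval", interval, 0, 15);
        checkRange("ratio", ratio, 0, 15);
        if (kebbiAir && (id == FACE || id == CHEST)) {
            throw new IllegalArgumentException(
                "Kebbi Air does not support Breath mode on the Face or Chest LED");
        }
    }

    private static void checkRange(String name, int value, int min, int max) {
        if (value < min || value > max) {
            throw new IllegalArgumentException(
                name + " must be " + min + " ~ " + max + ", got " + value);
        }
    }
}
```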

Motor control

Unity Scene : Demo_Motor

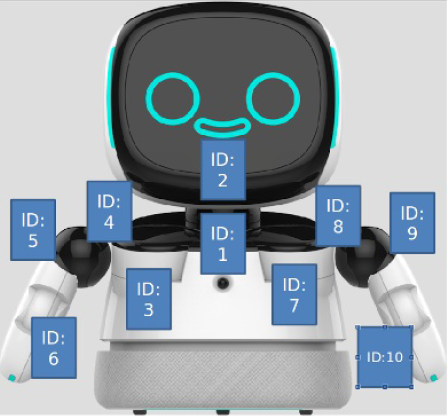

The Mibo robot has 10 motors, and the API can control each of them.

ctlMotor(final int motorid, final int speed, final float setPositionInDegree, final float setSpeedInDegreePerSec)

motorid:

- neck_y:1

- neck_z:2

- right_shoulder_z:3

- right_shoulder_y:4

- right_shoulder_x:5

- right_bow_y:6

- left_shoulder_z:7

- left_shoulder_y:8

- left_shoulder_x:9

- left_bow_y:10

speed: always 0

setPositionInDegree: target position in degrees

setSpeedInDegreePerSec: speed in degrees per second (range: 0 ~ 200)

Motor angle range table

| Motor ID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Max (degrees) | 20 | 40 | 5 | 70 | 100 | 0 | 5 | 70 | 100 | 0 |
| Min (degrees) | -20 | -40 | -85 | -200 | -3 | -80 | -85 | -200 | -3 | -80 |

Example:

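```csharp
// get the neck_y motor's current position in degrees
var neckY = Nuwa.getMotorPresentPossitionInDegree(Nuwa.NuwaMotorType.neck_y);

// set a motor's position in degrees (example values within the neck_y range)
Nuwa.NuwaMotorType type = Nuwa.NuwaMotorType.neck_y;
float motorRotateDegree = 10f;
float motorSpeed = 30f;
Nuwa.setMotorPositionInDegree((int)type, (int)motorRotateDegree, (int)motorSpeed);
```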
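To keep requested angles inside the Motor angle range table, an app can clamp them before sending them. A minimal Java sketch; MotorLimits is a hypothetical helper whose numbers are copied from the table above and from the 0 ~ 200 speed range of ctlMotor.

```java
// Hypothetical clamp helper built from the Motor angle range table; not part of the SDK.
class MotorLimits {
    // Index 0 is unused so that MAX[id] and MIN[id] line up with motor IDs 1..10.
    private static final float[] MAX = {0f, 20f, 40f, 5f, 70f, 100f, 0f, 5f, 70f, 100f, 0f};
    private static final float[] MIN = {0f, -20f, -40f, -85f, -200f, -3f, -80f, -85f, -200f, -3f, -80f};

    static float clampDegree(int motorId, float degree) {
        if (motorId < 1 || motorId > 10) {
            throw new IllegalArgumentException("motorId must be 1 ~ 10, got " + motorId);
        }
        return Math.max(MIN[motorId], Math.min(MAX[motorId], degree));
    }

    // setSpeedInDegreePerSec range: 0 ~ 200.
    static float clampSpeed(float degreePerSec) {
        return Math.max(0f, Math.min(200f, degreePerSec));
    }
}
```

For example, asking neck_y (ID 1) for 30 degrees yields 20, its documented maximum.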

Movement control

Unity Scene : Demo_movement

Control the robot to move forward, move backward, turn, and stop.

Low level control:

- move(float val): range -0.2 ~ 0.2 (meters/sec); positive values go forward, negative values go back

- turn(float val): range -30 ~ 30 (degrees/sec); positive values turn left, negative values turn right
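Values outside these ranges are best clamped by the app before calling move() or turn(), for example when they come from a joystick. A minimal Java sketch; DriveLimits is a hypothetical helper that only encodes the limits listed above.

```java
// Hypothetical helper; the limits come from the move/turn ranges above.
class DriveLimits {
    static final float MAX_MOVE = 0.2f; // meters per second; positive = forward
    static final float MAX_TURN = 30f;  // degrees per second; positive = turn left

    static float clampMove(float metersPerSec) {
        return Math.max(-MAX_MOVE, Math.min(MAX_MOVE, metersPerSec));
    }

    static float clampTurn(float degreesPerSec) {
        return Math.max(-MAX_TURN, Math.min(MAX_TURN, degreesPerSec));
    }
}
```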

Advanced control:

- forwardInAccelerationEx()

- backInAccelerationEx()

- stopInAccelerationEx()

- turnLeftEx()

- turnRightEx()

- stopTurnEx()

```csharp
// go forward
Nuwa.forwardInAccelerationEx();

// go back
Nuwa.backInAccelerationEx();

// stop
Nuwa.stopInAccelerationEx();
```

Local ASR

Unity Scene : Demo_LocalCommand

The ASR engine uses SimpleGrammarData to describe the list of keywords, so the app must create a grammar (command table) first.

- createGrammar(final String grammar_id, final String grammar_string)

- startLocalCommand()

- stopListen()

Example:

1. Prepare the grammar info:

```csharp
string mi_Name;   // the grammar's title; any value you like
string[] values;  // the strings to recognize

mi_Name = "robot";
values = new string[2] { "hello", "how are you" };

Nuwa.prepareGrammarToRobot(mi_Name, values);
```

2. Register the event callbacks:

```csharp
Nuwa.onGrammarState += OnGrammarState;          // grammar setup finished
Nuwa.onLocalCommandComplete += TrueFunction;    // recognition completed
Nuwa.onLocalCommandException += FalseFunction;  // recognition exception
```

3. After receiving the OnGrammarState callback, start the local command:

```csharp
void OnGrammarState(bool isError, string info)
{
    Debug.Log(string.Format("OnGrammarState isError = {0} , info = {1}", isError, info));
    if (isError)
        return; // grammar setup failed; do not start listening

    // start LocalCommand
    Nuwa.startLocalCommand();
}
```

The onLocalCommandComplete event delivers a JSON string that looks like this:

```json
{
  "result": "測試",
  "x-trace-id": "ef73bd1252544f30a818b0f68a6a72c7",
  "engine": "IFly local command",
  "type": 1,
  "class": "com.nuwarobotics.lib.voice.ifly.engine.IFlyLocalAsrEngine",
  "version": 1,
  "extra": {
    "content": "String"
  },
  "content": "{\n \"sn\":1,\n \"ls\":true,\n \"bg\":0,\n \"ed\":0,\n \"ws\":[{\n \"bg\":0,\n \"cw\":[{\n \"w\":\"測試\",\n \"gm\":0,\n \"sc\":67,\n \"id\":100001\n }],\n \"slot\":\"<NuwaQAQ>\"\n }],\n \"sc\":68\n}"
}
```
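The top-level "result" holds the recognized text; to read every entry of the cw list, the inner "content" string must be parsed too. A real JSON parser is the safer choice; the dependency-free Java sketch below only pulls out the "w" values with a regular expression. It assumes the content value has already been extracted and unescaped (plain quotes, as in the inner JSON above) and that words contain no escaped quotes. LocalCommandContent is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, regex-based sketch; not part of the SDK and not a general JSON parser.
class LocalCommandContent {
    // Matches "w":"<word>" but not "ws" (the quote must follow the w directly).
    private static final Pattern WORD = Pattern.compile("\"w\"\\s*:\\s*\"([^\"]*)\"");

    // Returns every recognized word; an empty list means nothing was recognized.
    static List<String> words(String content) {
        List<String> out = new ArrayList<>();
        if (content == null || content.isEmpty()) {
            return out;
        }
        Matcher m = WORD.matcher(content);
        while (m.find()) {
            out.add(m.group(1));
        }
        return out;
    }
}
```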

If the returned JSON is empty, the input was not recognized.

Cloud Speech To Text and Local ASR mix operation

Unity Scene : Demo_MixUnderstand

In ASR mix mode, the engine receives results from both the local and the cloud recognizer, but returns only one of them. The rules are:

- If the engine gets a local result, it returns only the local result.

- If the engine gets no local result, it returns the cloud ASR result, provided the Internet is available.

PS: Both cloud ASR and local ASR results are JSON strings.
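The selection rules can be restated as a few lines of code. This Java sketch is only an illustration of the rules above (the engine applies them internally and delivers just the chosen result); MixRule and its parameters are hypothetical.

```java
import java.util.Optional;

// Hypothetical illustration of the mix-mode rules; the real engine does this internally.
class MixRule {
    static Optional<String> pick(String localResult, String cloudResult, boolean online) {
        if (localResult != null && !localResult.isEmpty()) {
            return Optional.of(localResult); // a local result always wins
        }
        if (online && cloudResult != null && !cloudResult.isEmpty()) {
            return Optional.of(cloudResult); // otherwise fall back to the cloud result
        }
        return Optional.empty(); // nothing recognized
    }
}
```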

createGrammar(final String grammar_id, final String grammar_string)

startMixUnderstand( )

- Returns the local result first; if there is no local result, returns the cloud NLP result. The grammar table must be built before calling this API.

stopListen( )

Example:

1. Prepare the grammar info:

```csharp
string mi_Name;   // the grammar's title; any value you like
string[] values;  // the strings to recognize

mi_Name = "robot";
values = new string[2] { "hello", "how are you" };

Nuwa.prepareGrammarToRobot(mi_Name, values);
```

2. Register the event callbacks:

```csharp
Nuwa.onGrammarState += OnGrammarState;                  // grammar setup finished
Nuwa.onMixUnderstandComplete += MixUnderstandFunction;  // mix-understand result
Nuwa.onLocalCommandComplete += TrueFunction;            // local command completed
Nuwa.onLocalCommandException += FalseFunction;          // local command exception
```

3. After receiving the OnGrammarState callback, start the local command:

```csharp
void OnGrammarState(bool isError, string info)
{
    if (isError)
        return; // grammar setup failed; do not start listening

    Nuwa.startLocalCommand();
}
```

The onLocalCommandComplete event delivers a JSON string; a local command result looks like this:

```json
{
  "result": "測試",
  "x-trace-id": "2ed6670726b47c5a3ac006aafa9216d",
  "engine": "Google Cloud",
  "type": 1,
  "class": "com.nuwarobotics.lib.voice.hybrid.engine.NuwaTWMixEngine",
  "version": 1,
  "extra": {
    "content": "String"
  },
  "content": "{\"sn\":1,\"ls\":true,\"bg\":0,\"ed\":0,\"ws\":[{\"bg\":0,\"slot\":\"<MiboMixunderstand>\",\"cw\":[{\"id\":10001,\"w\":\"測試\",\"sc\":96,\"gm\":0}]}],\"sc\":94}"
}
```

and the mix-understand JSON looks like this:

```json
{
  "result": "123",
  "x-trace-id": "e5e37c47dc4649b08cf71015c8d1b69e",
  "engine": "Google Cloud",
  "type": 2,
  "class": "com.nuwarobotics.lib.voice.hybrid.engine.NuwaTWMixEngine",
  "version": 1
}
```

If the returned JSON is empty, the input was not recognized.

Speech2Text

Unity Scene : Demo_Speech2Text

- Set the callback:

```csharp
Nuwa.onSpeech2TextComplete += SpeechCallback;
```

- Call Speech2Text:

```csharp
Nuwa.setListenParameter(Nuwa.ListenType.RECOGNIZE, "language", "en_us");
Nuwa.setListenParameter(Nuwa.ListenType.RECOGNIZE, "accent", null);
Nuwa.startSpeech2Text(false); // false: no wake-up needed
```

The returned string is plain text and can be used directly.

This feature requires an Internet connection. If the robot's system time does not match the time zone of its current location, the returned string is very likely to be empty.

FaceTrack

Unity Scene : Demo_FaceTrack

```csharp
// 1. Register for the face-track event
Nuwa.onTrack += GetTrackData;

// 2. Send the request and wait about 2 seconds for the result
Nuwa.startRecognition(Nuwa.NuwaRecognition.FACE);

// 3. Receive the callback and store the values
void GetTrackData(Nuwa.TrackData[] data)
{
    if (data == null || data.Length == 0)
        return; // no face in this result

    float _x = float.Parse(data[0].x); // only use the first person's face
    float _y = float.Parse(data[0].y);
    float _w = float.Parse(data[0].width);
    float _h = float.Parse(data[0].height);

    FaceOriginPos = new Vector2(_x, _y);                   // face position
    FaceOriginSize = new Vector2(_w, _h);                  // face size
    FaceCenterPos = FaceOriginPos + (FaceOriginSize / 2f); // face center
}

// Received data looks like this:
// {"height":175,"width":175,"x":250,"y":93}
```
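The center computation above can be isolated and tested on its own. This Java sketch mirrors it and, as a swapped-in alternative to always using the first face, picks the largest box, which is usually the person closest to the camera (a heuristic, not SDK behavior). FaceBox is a hypothetical name. With the sample data, the center is (250 + 175/2, 93 + 175/2) = (337.5, 180.5).

```java
// Hypothetical helper mirroring the GetTrackData computation; not part of the SDK.
class FaceBox {
    final float x, y, width, height;

    FaceBox(float x, float y, float width, float height) {
        this.x = x;
        this.y = y;
        this.width = width;
        this.height = height;
    }

    float centerX() { return x + width / 2f; }
    float centerY() { return y + height / 2f; }
    float area() { return width * height; }

    // Alternative to "only get first person's face": choose the largest box.
    static FaceBox largest(FaceBox[] faces) {
        if (faces == null || faces.length == 0) {
            return null; // no face detected
        }
        FaceBox best = faces[0];
        for (FaceBox face : faces) {
            if (face.area() > best.area()) {
                best = face;
            }
        }
        return best;
    }
}
```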

Control Face

Unity Scene : Demo_ControlFace

```csharp
// usage
Nuwa.ShowFace();
Nuwa.HideFace();
Nuwa.MouthOn();
Nuwa.MouthOff();

string motionId = "001_J1_Good"; // Kebbi's motion ID
Nuwa.PlayMotion(motionId);

int x = 0, y = 0;      // start position (x, y) of the face window
int w = 1024, h = 600; // width and height of the face window; the normal face size is 1024x600
Nuwa.ChangeFace(x, y, w, h);
```

If you want to exit the app after calling ShowFace(), you must first set the face window size back to (1024, 600) and call HideFace().
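ChangeFace(x, y, w, h) takes a window rectangle on the 1024x600 screen. This Java sketch computes the arguments for a centered window and for the full-screen size to restore before exiting; FaceWindow is hypothetical, and using (0, 0) as the full-screen origin is an assumption.

```java
// Hypothetical helper for ChangeFace(x, y, w, h) arguments on a 1024x600 screen.
class FaceWindow {
    static final int SCREEN_W = 1024;
    static final int SCREEN_H = 600;

    // Returns {x, y, w, h} for a window of the given size centered on the screen,
    // shrinking the size to fit the screen if necessary.
    static int[] centered(int w, int h) {
        int cw = Math.min(Math.max(w, 1), SCREEN_W);
        int ch = Math.min(Math.max(h, 1), SCREEN_H);
        return new int[] {(SCREEN_W - cw) / 2, (SCREEN_H - ch) / 2, cw, ch};
    }

    // Full-screen rectangle to restore before HideFace() (origin assumed to be 0, 0).
    static int[] fullScreen() {
        return new int[] {0, 0, SCREEN_W, SCREEN_H};
    }
}
```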

Q & A

Q1: Why doesn't anything happen when the app calls "mRobot.motionPlay("001_J1_Good", true/false)"? Ans:

- Check the "public void onErrorOfMotionPlay(int errorcode)" callback to get the error details.

- make sure the motion name is valid

- check the motion list to get the correct motion name

[Kebbi Motion list] https://dss.nuwarobotics.com/documents/listMotionFile

Q2: Why can't the app control the LEDs? Ans:

- The app must call disableSystemLED() once first, e.g. in onCreate().

- The app must call enableSystemLED() when it closes, e.g. in onPause().

Q3: Unity throws an [xxx_gameObject can only be called from the main thread] exception

- Unity's built-in variables and functions, such as xxx.gameObject.SetActive(true/false), cannot be used directly inside a callback received from Android, because the callback does not run on Unity's main thread.

- To solve this problem, wait one frame and make the call from Update().

- Alternatively, use the UniRx plugin: Observable.NextFrame() lets you put the part that needs to run into the next frame.

https://forum.unity.com/threads/unity-android-main-ui-thread.174553/
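The "wait one frame and call it in Update()" approach is commonly implemented as a thread-safe queue: callbacks post work from any thread, and the main thread drains the queue once per frame. The sketch below is in Java for illustration (a C# MonoBehaviour would follow the same structure); MainThreadQueue is a hypothetical name, not part of the SDK or UniRx.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical "run on the main thread next frame" queue; not part of any SDK.
class MainThreadQueue {
    private final Queue<Runnable> pending = new ConcurrentLinkedQueue<>();

    // Safe to call from any thread, e.g. inside a callback from Android.
    void post(Runnable action) {
        pending.add(action);
    }

    // Call once per frame on the main thread (in Unity, from Update()).
    // Runs only the actions queued before this call, so an action that posts
    // another action is deferred to the next frame instead of looping forever.
    int drain() {
        int count = pending.size();
        int ran = 0;
        for (int i = 0; i < count; i++) {
            Runnable action = pending.poll();
            if (action == null) {
                break;
            }
            action.run();
            ran++;
        }
        return ran;
    }
}
```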